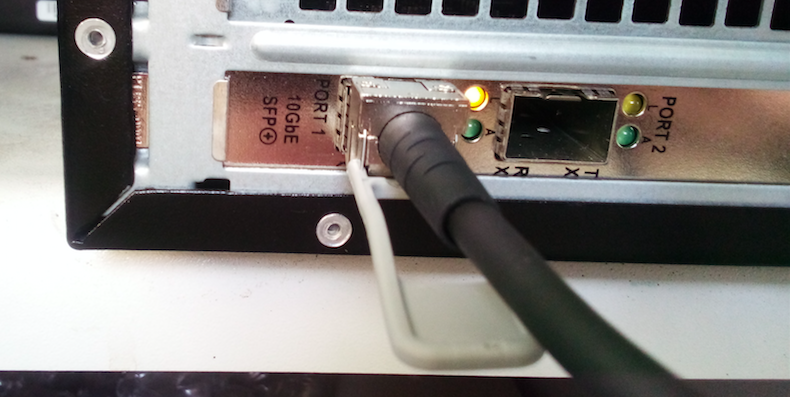

I have an HP dual-port 10Gb NIC (model number) and a 5m Cisco SFP+ DAC cable, both from eBay.

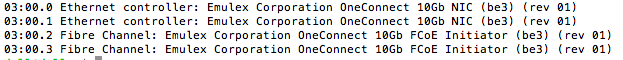

So they are actually CNAs (converged network adapters), which is useful to know. These are in FCoE mode.

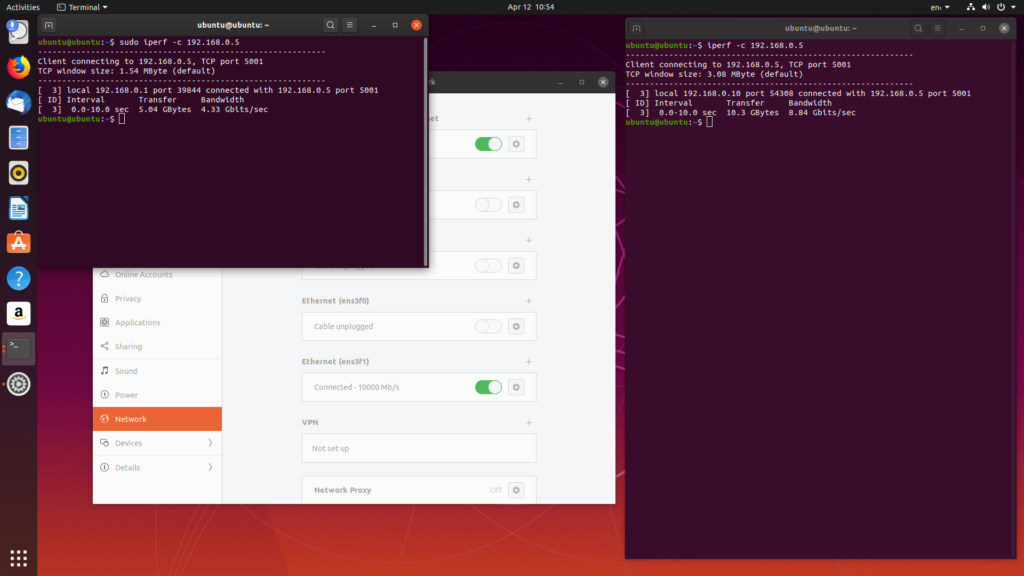

I tested these with a switch, but as I only had one 10Gb NIC I went ahead and bought two more. Once they arrived I put them in the old xw4600-based workstation and gave them a go with iPerf. The one in the top x16 slot got 8.84Gb/s, and the one in the x8 slot below it got 4.33Gb/s.

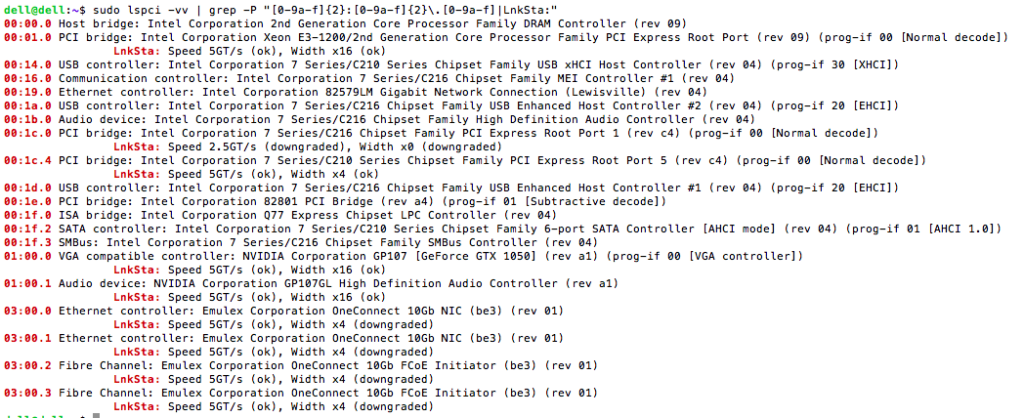

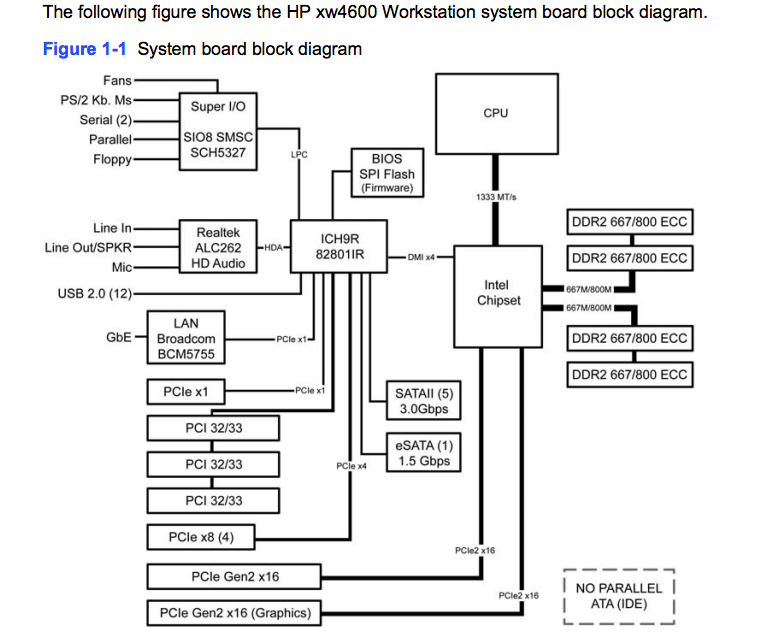

So why is this? Well, firstly let’s look at the hardware: the x8 slot is PCIe Gen1 and wired only x4, while the x16 slots are Gen2. So in theory an x16 slot should be able to run a 10Gb NIC just fine in x8 mode.

The x8 slot, being only x4 electrically and only Gen1, has a max of 8Gb/s, which is less than the NIC’s 10Gb/s line rate.

| PCIe version | Year | Transfer rate | Encoding | x1 speed | x4 speed | x8 speed | x16 speed |
|---|---|---|---|---|---|---|---|
| 1 | 2004 | 2.5GT/s | 8b/10b | 2Gb/s | 8Gb/s | 16Gb/s | 32Gb/s |
| 2 | 2007 | 5GT/s | 8b/10b | 4Gb/s | 16Gb/s | 32Gb/s | 64Gb/s |
| 3 | 2010 | 8GT/s | 128b/130b | 7.88Gb/s | 31.52Gb/s | 63.04Gb/s | 126.08Gb/s |
| 4 | 2017 | 16GT/s | 128b/130b | 15.76Gb/s | 63.04Gb/s | 126.08Gb/s | 252.16Gb/s |
| 5 | 2019 | 32GT/s | 128b/130b | 31.52Gb/s | 126.08Gb/s | 252.16Gb/s | 504.32Gb/s |
| 6 | 2022 | 64GT/s | 242B/256B | | | | |
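The figures in the table come from multiplying the transfer rate by the encoding efficiency and the lane count. Here is a minimal Python sketch of that arithmetic for Gen1 to Gen5; the function and dictionary names are my own, and the results ignore packet-level protocol overhead, so real throughput will be lower. Gen3 and later differ from the table by a hundredth or so because the table rounds the x1 figure before multiplying.

```python
# Transfer rate in GT/s and encoding efficiency for each PCIe generation.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def pcie_bandwidth_gbps(generation: int, lanes: int) -> float:
    """Raw data bandwidth in Gb/s, per direction, after line encoding."""
    rate_gts, efficiency = PCIE_GENERATIONS[generation]
    return rate_gts * efficiency * lanes


if __name__ == "__main__":
    # The two slots in the xw4600: Gen2 x8 and Gen1 x4.
    for generation, lanes in [(2, 8), (1, 4)]:
        gbps = pcie_bandwidth_gbps(generation, lanes)
        print(f"Gen{generation} x{lanes}: {gbps:.2f}Gb/s")
```

For the xw4600 this gives 32Gb/s for the Gen2 x8 link and 8Gb/s for the Gen1 x4 link, so only the second is below the 10Gb/s line rate of the NIC.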

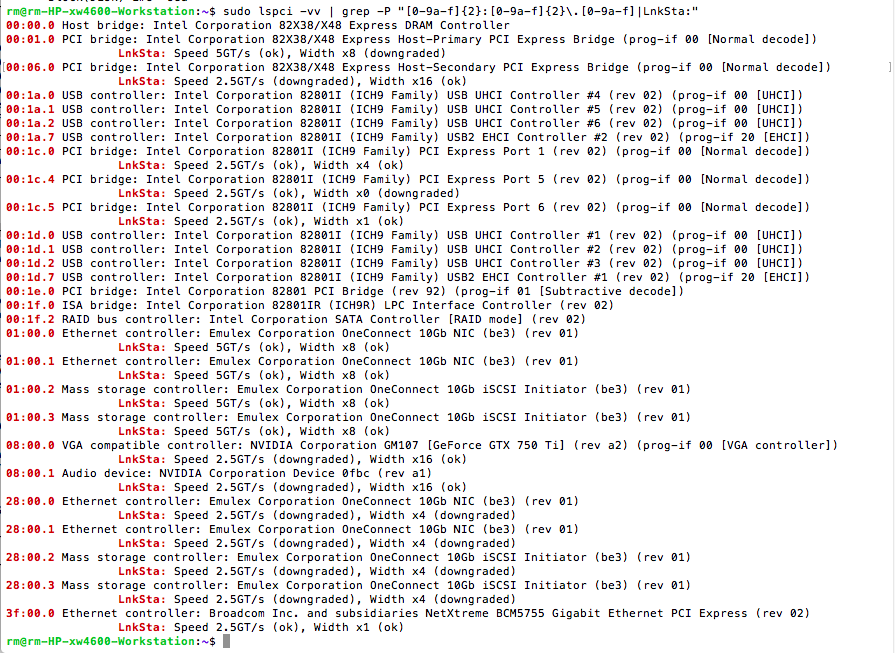

Here’s the output showing the PCIe link status. The top card is running x8 at 5GT/s, so Gen2, and the bottom card is running x4 at 2.5GT/s, so Gen1.

You might notice they are in iSCSI mode rather than FCoE. The 10Gb NICs are actually only Gen2 anyway (PCIe x8 Gen2). See https://support.hpe.com/hpesc/public/docDisplay?docLocale=en_US&docId=c03572683
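On Linux the negotiated link speed and width can also be read straight from sysfs, which is handy for checking several cards in a script. This is a rough sketch, assuming a kernel that exposes `current_link_speed` and `current_link_width` under `/sys/bus/pci/devices/`; the device address `0000:01:00.0` is a placeholder rather than the address of my cards, and the helper names are my own.

```python
from pathlib import Path

# Transfer rate in GT/s mapped to PCIe generation.
GENERATION_BY_RATE = {2.5: 1, 5.0: 2, 8.0: 3, 16.0: 4, 32.0: 5, 64.0: 6}


def parse_link(speed_text: str, width_text: str) -> tuple[int, int]:
    """Turn sysfs strings such as '5.0 GT/s PCIe' and '8' into (generation, lanes)."""
    rate = float(speed_text.split()[0])
    return GENERATION_BY_RATE[rate], int(width_text)


def read_link(address: str, root: str = "/sys/bus/pci/devices") -> tuple[int, int]:
    """Read the negotiated link of one PCI device from sysfs."""
    device = Path(root) / address
    speed = (device / "current_link_speed").read_text().strip()
    width = (device / "current_link_width").read_text().strip()
    return parse_link(speed, width)


if __name__ == "__main__":
    # Placeholder address; find the real one with lspci.
    generation, lanes = read_link("0000:01:00.0")
    print(f"Gen{generation} x{lanes}")
```

The parser only looks at the leading number in the speed string, since the exact suffix varies between kernel versions.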

Great article on Fibre Channel vs iSCSI: https://www.infoworld.com/article/2627603/fibre-channel-vs–iscsi–the-war-continues.html

I can also tell you that in an iPerf loopback test the Dell 7010 gets 19.8Gb/s and the xw4600 gets 14.8Gb/s.

So I’m not 100% sure where the bottleneck is. In subsequent testing I managed to get a 9.22Gb/s rate, which I think is probably the limit of transfer given the TCP overheads. I’ll try with jumbo frames.
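To put a rough number on the TCP overhead, here is a back-of-the-envelope Python sketch of the best-case TCP payload rate for a given MTU. It assumes standard Ethernet framing (14-byte header, 4-byte FCS, 8-byte preamble, 12-byte inter-frame gap), IPv4 and TCP headers of 20 bytes each with no VLAN tag, and the 12-byte TCP timestamp option that Linux enables by default. The names are my own, and it ignores ACK traffic and everything the CPU has to do.

```python
ETHERNET_OVERHEAD = 14 + 4 + 8 + 12  # header, FCS, preamble, inter-frame gap
IP_HEADER = 20
TCP_HEADER = 20
TCP_TIMESTAMPS = 12  # optional, assumed enabled here


def tcp_goodput_gbps(link_gbps: float, mtu: int, timestamps: bool = True) -> float:
    """Best-case TCP payload rate in Gb/s for a given link speed and MTU."""
    options = TCP_TIMESTAMPS if timestamps else 0
    payload = mtu - IP_HEADER - TCP_HEADER - options
    bytes_on_wire = mtu + ETHERNET_OVERHEAD
    return link_gbps * payload / bytes_on_wire


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: {tcp_goodput_gbps(10, mtu):.2f}Gb/s")
```

Under these assumptions the ceiling works out to about 9.41Gb/s at an MTU of 1500 and about 9.90Gb/s at an MTU of 9000, so jumbo frames should recover roughly half a gigabit of framing overhead on their own.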

With an MTU of 9000 I managed to get bursts of 9.90Gb/s. While running htop on both the Dell and the xw4600, I noticed that the puny dual-core E6550 at 2.33GHz in the xw4600 was pegged at 100%, while the i3-2120 at 3.3GHz in the Dell didn’t go above 60%. I’m pretty sure the bottleneck is in the CPU/PCIe workings; after all, a dual core from 2007 probably wasn’t designed to handle 10Gb networking.